Pushing the limits of LLMs and GenAI: My Journey to an AI Tennis Coach App

A look at how a data scientist leveraged cutting-edge large language models, code assistants and AI image, audio and video generation tools to build an innovative tennis coach app. This blog highlights the journey, tools, and hands-on techniques that made it all possible.

TENNIS · AI · GENAI · DEVELOPMENT

1/15/2025 · 6 min read

It’s been an interesting couple of years as I explored the frontiers of AI via a project close to my heart - creating an AI-powered tennis coaching app. I’m not a coach myself, but I do love tennis—and I’m also a data scientist who can’t resist exploring new ways AI can make life more fun and educational.

As with any technology, I find the easiest way to discover the limits of what it's capable of is to try to build a concrete product. For me, that meant exploring how AI could be used to coach tennis players and improve their game.

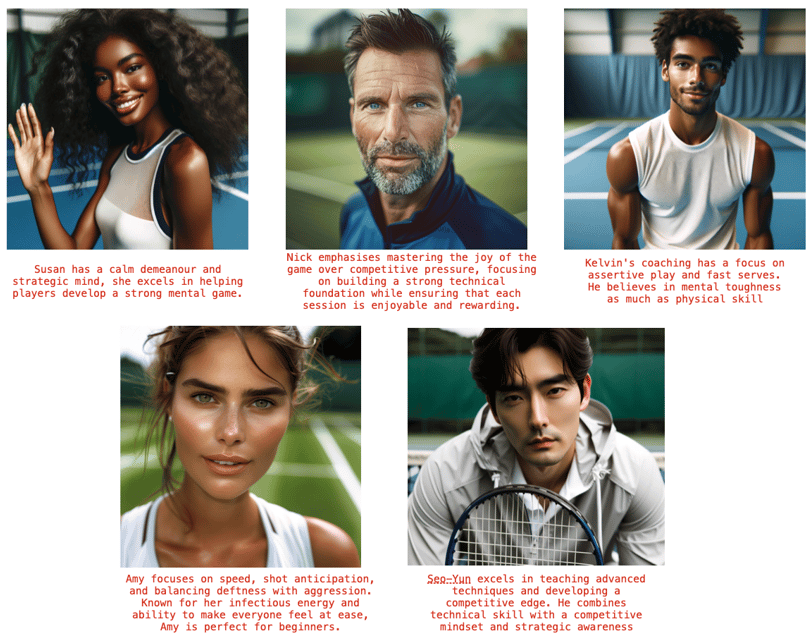

The first thing I needed to test was whether I could make convincing coaches.

1. Creating Coaches with Midjourney

Initially, I tried OpenAI's DALL·E for creating images of the tennis coaches, but I found Midjourney was better at consistency, which matters if you need multiple shots of the same “person”. The advantage of Midjourney is that it lets you iterate on a particular face and get slight variations of it until you find exactly the “person” you want. I refined the prompts, adjusting details until I got coach images that felt realistic and full of character. These can be seen in the image above.

2. Breathing life into the Coaches with Pika

In 2024 a new set of genAI tools started to become available that could generate short videos. The first I got my hands on was from Pika Labs.

Pika lets you start from an image and "paint" the areas that you want to move, then define the strength and direction of movement. In the short example video below, the hair was painted and marked for strong ambient motion.

[An AI generated video clip with motion]

3. Using GPT-4 and Other Language Models for Tennis Content

One of the earliest steps was producing the core content: explanations of the major tennis shots (serve, forehand, backhand, volley). For each shot I needed clear explanations of the key phases, e.g.:

Backswing

Contact

Follow-through, etc.

Instead of writing all of that from scratch, I used OpenAI's GPT-4 in ChatGPT to draft text that explains these phases in a friendly, coach-like tone. Of course, it has to be right. Early models like GPT-3.5 occasionally got things wrong, but after validating the content and testing my coaches with some difficult questions on tennis rules and very specific coaching tips, I gained confidence that the latest models give accurate and valuable advice.

Then I prompted the LLM to write introductory monologues for various “coach personalities,” so users could pick the style they liked best, whether it’s a strict instructor, a laid-back mentor, or something else entirely.

4. Generating Audio Coaches with ElevenLabs

Once the scripts were written, I turned to ElevenLabs to create the actual voices. I purchased voice credits and used a Python script to call their API for each combination of coach personality and script segment. This gave me a nice collection of audio clips that sounded like real coaches, with emotional depth and tonality.
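For readers who want to try something similar, here is a minimal sketch of what such a batch script could look like. It is not the script used for the app: the voice IDs, segment names, and output paths are placeholders, and the endpoint and `xi-api-key` header reflect my understanding of the ElevenLabs text-to-speech REST API, so check the current documentation before relying on them.

```python
import itertools
import json
import urllib.request
from pathlib import Path

# Assumed endpoint shape for ElevenLabs text-to-speech; verify against the docs.
API_URL = "https://api.elevenlabs.io/v1/text-to-speech/{voice_id}"


def build_jobs(voices, segments):
    """Pair every coach voice with every script segment.

    voices:   {coach_name: voice_id}       (placeholder IDs)
    segments: {segment_name: script_text}
    """
    jobs = []
    for (coach, voice_id), (segment, text) in itertools.product(
        voices.items(), segments.items()
    ):
        jobs.append(
            {
                "voice_id": voice_id,
                "text": text,
                "out_path": f"audio/{coach}_{segment}.mp3",
            }
        )
    return jobs


def synthesise(job, api_key):
    """Send one job to the API and write the returned audio bytes to disk."""
    request = urllib.request.Request(
        API_URL.format(voice_id=job["voice_id"]),
        data=json.dumps({"text": job["text"]}).encode("utf-8"),
        headers={"xi-api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )
    out_path = Path(job["out_path"])
    out_path.parent.mkdir(parents=True, exist_ok=True)
    with urllib.request.urlopen(request) as response:
        out_path.write_bytes(response.read())
    return out_path


# Hypothetical coaches and scripts, only to show the shape of the inputs.
voices = {"strict": "VOICE_ID_1", "laid_back": "VOICE_ID_2"}
segments = {
    "intro": "Welcome to the court.",
    "serve_contact": "Reach up and meet the ball at full stretch.",
}
jobs = build_jobs(voices, segments)
```

Building the job list separately from the network call makes it easy to review exactly which clips will be generated, and therefore how many credits will be spent, before calling `synthesise(job, api_key)` on each one.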

5. Turning Faces + Audio into Videos with Runway

One of the best widely available video generation tools I've had access to (OpenAI's Sora is not yet available in the UK) is Runway, a creative tool that turns text prompts into images, videos, or edits, using models like Stable Diffusion. I used Runway to merge my newly generated faces with the audio: I fed Runway LipSync the static profile shots and the voice files, and it created simple videos in which each coach introduces themselves.

[A short clip of a coach introducing themselves]

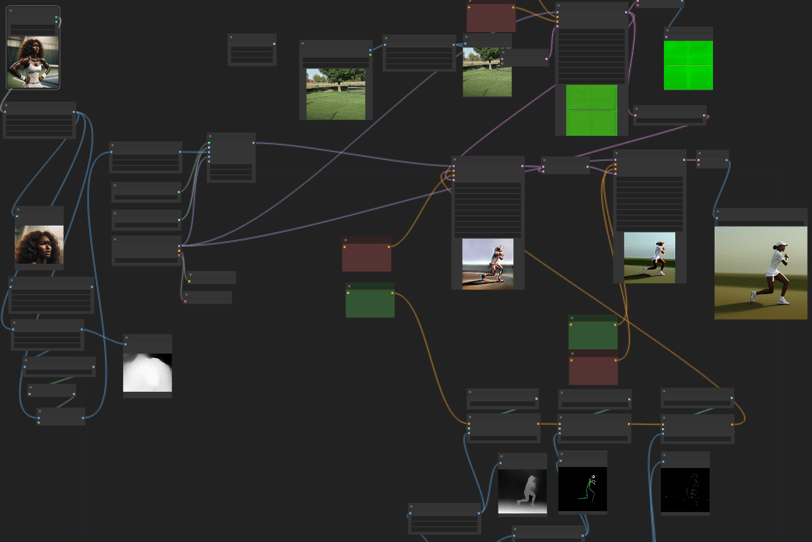

6. Creating Custom Tennis Shot Images Using ComfyUI and Stable Diffusion

For the actual tennis shot illustrations, I wanted to ensure I wasn’t infringing on anyone’s photography or player rights. Instead, I sketched out stick figures based on pro players’ positions and used those as a reference. I then combined these stick figures, the coach profile images, and background elements with ComfyUI (using Stable Diffusion under the hood) to create brand-new, fully original images.

Shoutout to NerdyRodent for tutorials and workflows: [NerdyRodent link]

[image showing the ComfyUI interface with nodes for face, background and pose extract assignment to create an image of one of our coaches demonstrating a tennis shot]

7. The Heart of the App: Multimedia LLM Coaching

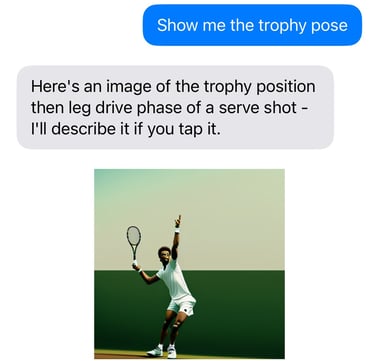

On the software side, I built an iOS interface that connects to either the OpenAI GPT API or Anthropic's Claude Sonnet API (depending on which model currently gives the best balance of cost and performance). The chat is the main place where a user can have a conversation with a chosen coach persona. I set up a backend server to act as an intermediary, allowing me to manage the conversation flow and apply system prompts.

Memory / Summaries: After each session, the chat summary is turned into structured JSON. This helps the coach “remember” user preferences, goals, and past advice in subsequent chats.
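To make the memory idea concrete, here is a rough sketch of how a backend could parse a JSON summary and feed it back into the next chat through the system prompt. The field names (`goals`, `preferences`, `past_advice`) and the persona text are my own illustrative assumptions, not the app's actual schema. The message list follows the OpenAI chat style; Anthropic's API takes the system prompt as a separate field instead.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class SessionMemory:
    """Hypothetical schema for what the coach carries between sessions."""

    goals: list = field(default_factory=list)
    preferences: dict = field(default_factory=dict)
    past_advice: list = field(default_factory=list)


def parse_summary(raw_json):
    """Parse the LLM's JSON summary, ignoring any keys outside the schema."""
    data = json.loads(raw_json)
    known = {
        key: data[key]
        for key in ("goals", "preferences", "past_advice")
        if key in data
    }
    return SessionMemory(**known)


def build_messages(persona_prompt, memory, user_text):
    """Assemble the message list the backend would send to the chat model."""
    system = (
        f"{persona_prompt}\n\n"
        "What you remember about this player (JSON):\n"
        f"{json.dumps(asdict(memory))}"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_text},
    ]


# Example: a summary with one unexpected key ("mood"), which is dropped.
raw = (
    '{"goals": ["more consistent serve"], '
    '"preferences": {"tone": "strict"}, "mood": "tired"}'
)
memory = parse_summary(raw)
messages = build_messages(
    "You are a strict but fair tennis coach.",
    memory,
    "What should I work on today?",
)
```

Filtering the summary down to known keys is a cheap guard against the model inventing extra fields, which would otherwise leak into later prompts.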

Function Calling: When a user wants to see a visual (e.g., “Show me how the backswing should look in a volley”), the LLM triggers a function call. That function returns the correct image, and if the user taps on it, they can hear the coach audio describing that position.
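As an illustration of the pattern, the sketch below declares one tool in the JSON-schema style used by OpenAI-type function calling and resolves the model's call to an image and its matching audio clip. The tool name, the phase list, and the file layout are hypothetical and chosen only for this example.

```python
import json

# Tool description in the JSON-schema style used by OpenAI-type function calling.
# The tool name, parameters, and file layout are hypothetical.
SHOW_SHOT_IMAGE_TOOL = {
    "type": "function",
    "function": {
        "name": "show_shot_image",
        "description": "Show the reference image for one phase of a tennis shot.",
        "parameters": {
            "type": "object",
            "properties": {
                "shot": {
                    "type": "string",
                    "enum": ["serve", "forehand", "backhand", "volley"],
                },
                "phase": {
                    "type": "string",
                    "enum": ["backswing", "contact", "follow_through"],
                },
            },
            "required": ["shot", "phase"],
        },
    },
}


def handle_tool_call(name, arguments_json, coach):
    """Resolve a model-issued tool call to image and audio assets."""
    if name != "show_shot_image":
        return {"error": f"unknown tool: {name}"}
    args = json.loads(arguments_json)
    shot, phase = args.get("shot"), args.get("phase")
    props = SHOW_SHOT_IMAGE_TOOL["function"]["parameters"]["properties"]
    # Re-validate on the server: the model's arguments are not guaranteed valid.
    if shot not in props["shot"]["enum"] or phase not in props["phase"]["enum"]:
        return {"error": "unsupported shot or phase"}
    stem = f"{coach}/{shot}_{phase}"
    return {"image": f"images/{stem}.png", "audio": f"audio/{stem}.mp3"}


# "Show me how the backswing should look in a volley"
result = handle_tool_call(
    "show_shot_image", '{"shot": "volley", "phase": "backswing"}', coach="strict"
)
bad = handle_tool_call(
    "show_shot_image", '{"shot": "smash", "phase": "contact"}', coach="strict"
)
```

Returning the audio path alongside the image is one simple way to support the tap-to-hear behaviour, since the client then needs no second lookup.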

[partial screenshot of the app during a chat with the coach - the LLM has decided to retrieve an image]

9. "Old-Fashioned ML" for Shot Classification

Meanwhile, I have a simpler action classifier that tries to categorise each clip as a serve, forehand, backhand, or volley. I trained it in Apple's Create ML on a small set of video clips I took of family and friends. The model splits each video into frames, extracts human body poses from each frame using Apple's Vision framework, and matches the resulting motion patterns against the predefined shot classes.
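The data flow of that pipeline (frames, then per-frame poses, then a label per clip) can be sketched in a few lines. This is not the Create ML model: the window sizes are arbitrary, the pose format is invented, and `toy_window_classifier` is a deliberately crude stand-in rule (wrist above head means serve) that exists only so the sketch runs end to end.

```python
from collections import Counter


def sliding_windows(frames, size, stride):
    """Yield fixed-length runs of consecutive per-frame poses."""
    for start in range(0, len(frames) - size + 1, stride):
        yield frames[start:start + size]


def classify_clip(pose_frames, classify_window, size=30, stride=15):
    """Label each window, then take a majority vote for the whole clip."""
    votes = Counter(
        classify_window(window)
        for window in sliding_windows(pose_frames, size, stride)
    )
    if not votes:
        return None  # clip is shorter than one window
    return votes.most_common(1)[0][0]


def toy_window_classifier(window):
    """Stand-in for a trained model: wrist above head at any point => serve.

    Heights are in metres with y pointing up (an assumption of this sketch).
    """
    if any(frame["wrist_y"] > frame["head_y"] for frame in window):
        return "serve"
    return "forehand"


# Synthetic clip: 40 frames with a low wrist, then 50 with the wrist overhead.
low = {"wrist_y": 1.0, "head_y": 1.7}
high = {"wrist_y": 2.1, "head_y": 1.7}
clip = [low] * 40 + [high] * 50
label = classify_clip(clip, toy_window_classifier)
too_short = classify_clip([low] * 10, toy_window_classifier)
```

Voting over overlapping windows smooths out a single misread stretch of frames, which matters when the training set is small.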

It’s not perfect and still labeled “beta,” but it shows promise.

10. Building the iOS App with ChatGPT, Claude, and o1

I leaned heavily on AI for coding assistance, starting with ChatGPT for setting up views in Swift. As the codebase grew, I switched to Claude 3.5 Sonnet, which was better at managing larger project files and referencing them consistently. Recently, I’ve tested the o1 model for more difficult coding logic.

The workflow looked like this:

Generate Swift code using the LLM.

Copy/paste it into Xcode.

Debug compilation errors.

Repeat until it worked smoothly on simulators and real devices.

Share logs with the LLM whenever I got stuck, which saved me hours of guesswork.

Future Steps: Video Understanding

Right now, my approach is limited to single-frame analysis. The next leap is real video understanding, which doesn’t seem fully baked yet, even as of early 2025. Once LLMs can process an entire video clip natively, we’ll see deeper coaching feedback on footwork and swing timing in real time.

Final Thoughts

Over two years, I’ve seen LLMs improve rapidly in handling text, images, and even multi-turn conversations. I also learned a lot about how to incorporate these models in a careful way—keeping human oversight at key points like code debugging and image checks.

The result is something I’m proud of: a tennis coach app that feels surprisingly personal, thanks to the synergy of text, audio, images, and a bit of real-time pose analysis. It’s a blend of human effort, AI-generated content, and iterative refinement—much like perfecting a tennis stroke itself.

Thanks for reading! If you’re curious about any of the tools or techniques mentioned, check the links above or stay tuned for future updates on full video analysis and ongoing improvements in tennis shot classification.

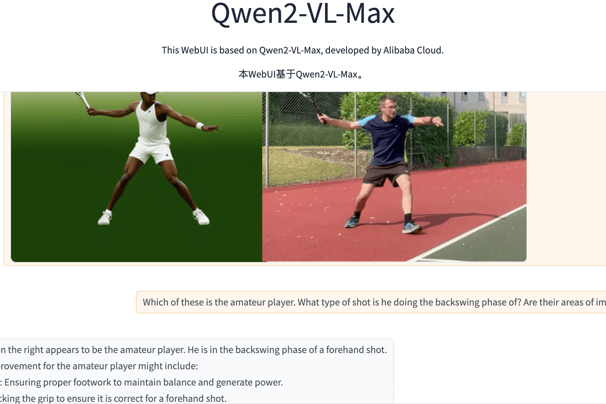

8. Visual Language Models and Pose Detection

To really push boundaries, I wanted the app to analyse user-uploaded images (stills from their swing videos) and compare them against reference images of a pro. I used pose detection to crop around the user and highlight their posture. Then the LLM compares the user’s pose to the pro’s pose and gives personalised advice.
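The cropping step is simple enough to sketch. The function below assumes the pose detector returns keypoints as `(x, y, confidence)` in pixel coordinates with the origin at the top-left; a detector that returns normalised coordinates would need converting first. The padding fraction and confidence threshold are illustrative values, not the app's settings.

```python
def crop_box(keypoints, image_width, image_height, padding=0.25, min_confidence=0.3):
    """Return a (left, top, right, bottom) pixel box around confident keypoints.

    keypoints: iterable of (x, y, confidence) in pixel coordinates.
    padding:   extra margin on each side, as a fraction of the pose's extent.
    """
    points = [(x, y) for x, y, conf in keypoints if conf >= min_confidence]
    if not points:
        return None  # nobody detected with enough confidence
    xs, ys = zip(*points)
    pad_x = (max(xs) - min(xs)) * padding
    pad_y = (max(ys) - min(ys)) * padding
    # Clamp to the image so the crop never reaches outside the frame.
    left = max(0, int(min(xs) - pad_x))
    top = max(0, int(min(ys) - pad_y))
    right = min(image_width, int(max(xs) + pad_x) + 1)
    bottom = min(image_height, int(max(ys) + pad_y) + 1)
    return left, top, right, bottom


# Two confident joints and one low-confidence outlier that should be ignored.
keypoints = [(100, 50, 0.9), (200, 450, 0.8), (900, 900, 0.1)]
box = crop_box(keypoints, image_width=640, image_height=480)
```

Dropping low-confidence keypoints before computing the box keeps a stray detection (a spectator, a racket on the ground) from stretching the crop away from the player.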

This is possible thanks to the recent improvements in vision-augmented LLMs. Over the past two years, the progress has been rapid. These models went from “barely accurate” to “surprisingly capable,” with open-source models like PaliGemma or Qwen-2.5-72B about 9 months behind the closed models from OpenAI, Google and Anthropic:

[Example of an open-source Visual LM interpreting tennis pose images - HuggingFace Spaces lets you try it]

Contacts

humanabilityai@gmail.com